Building Your Own Tools: From VFX Artist to Developer

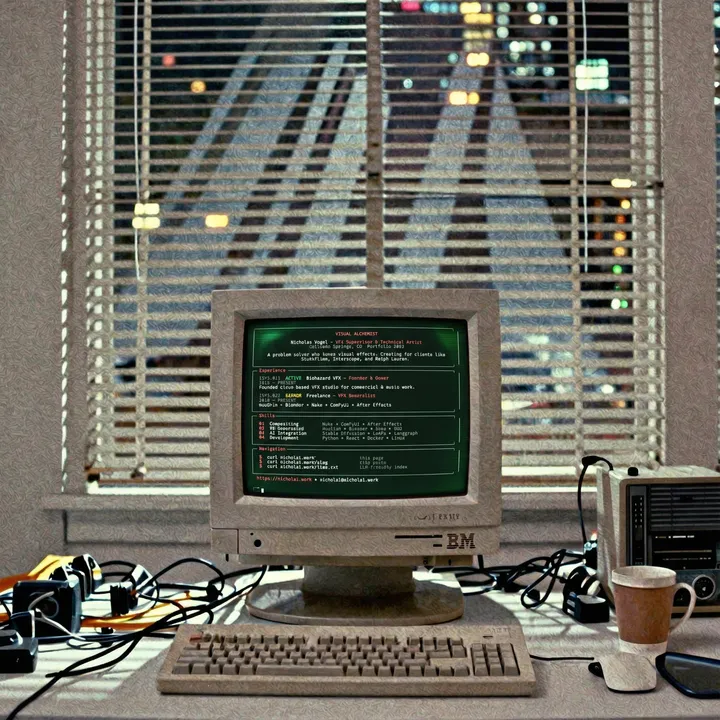

Self-Hosting and AI Development in 2024: Why I build custom software instead of using cloud solutions, and how you can too without being a hardcore developer.

What’s the deal?

I’m a VFX artist by trade, and until recently I never considered myself a developer.

Just two years ago, the extent of my development work consisted of writing basic Python and simple Bash for artistic tools in Nuke, fiddling with a basic HTML + CSS website, and managing my company’s infrastructure (Nextcloud, Gitea, n8n).

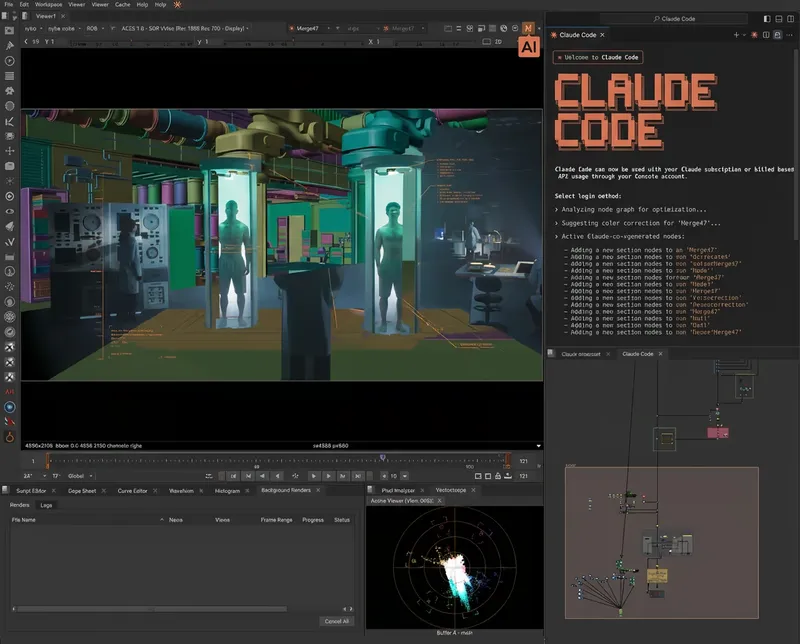

One recent example: we integrated AI tools into a high-profile brand film for G-Star Raw’s Olympics campaign, using Stable Diffusion for concept exploration and AI-generated normal maps for relighting. The full case study is here, and it demonstrates how AI can augment traditional VFX workflows when treated as a tool, not a replacement.

But since August 2024, things have changed rapidly, both in the world and in my life:

- I switched to Linux (Arch, btw)

- AI switched from an interesting gimmick to a real tool in the software development world.

Since then, I increasingly find myself creating my own tools and software ecosystems, working with LLMs to write code and documents and to streamline workflows.

The Cloud Trap

Look, I get it. Cloud services are convenient. Google Drive, Notion, Motion - they all work great out of the box. Low barrier to entry, no server knowledge required, just sign up and go. There’s real value in that.

But here’s the thing: everything’s becoming a subscription. More importantly, we have zero control over what these companies do with our data. For some people that’s fine. For me? Not so much.

When you add it all up - cloud storage, document editing, video review, streaming, calendar, notes, AI task management - you’re looking at hundreds of dollars a month. And you’re still locked into their ecosystem, playing by their rules.

The Speed Factor

I learned to code in high school. Started with Java, made some basic games, wrote little scripts here and there. It was fun being able to build things, but there was always this massive wall: time.

Even professional developers with 20 years of experience could only automate so much in a 2-hour window. Want to build something actually useful? That’s weeks of work. Want to build a Google Drive replacement? See you in two years, if you’re lucky.

And that’s assuming you don’t accumulate a mountain of tech debt halfway through that forces you to refactor everything. Which, let’s be honest, you probably will.

This is why I never seriously considered building my own tools. The math just didn’t work.

Then Everything Changed

Now we have AI that can generate code in a fraction of the time it used to take. I’m not talking about autocomplete. I’m talking about entire features, complex integrations, full applications.

My role has shifted. I’m less of a hands-on coder now and more of an orchestrator, somewhere between a developer and a product manager. Do I miss writing code sometimes? Yeah. Has it made me a worse programmer in some ways? Probably. But I’m also building more than I ever have, and the tools I’m creating are genuinely useful to me.

I’ve written n8n automations that would’ve taken weeks before. Now I knock them out in a weekend. I’ve integrated Nextcloud with Gitea, set up CalDAV sync, built email parsing agents. Things that used to feel impossible are now just normal Saturday projects.

Design First, Code Second

As a VFX artist, I care deeply about how things look and feel. The visual design, the user experience - that’s where I get my real enjoyment. I’ve never enjoyed writing database schemas or building auth flows (does anyone?), but I’ve always loved figuring out how to make a contact form feel special, how to make a user say “wow.”

Now I can focus on exactly that. I sketch in Figma, prototype in HTML, and figure out exactly what I want things to look like, then hand it off to AI agents to build. They handle the implementation; I handle the vision.

This approach has taught me more about communication and project management than anything else. Getting AI to build what you actually want requires clear, detailed specifications. Turns out, humans might not always appreciate that communication style, but LLMs love it.

This orchestration mindset extends beyond software development. When I needed to run proprietary VFX render farm software on Arch Linux, I approached it systematically: debugging library dependencies, understanding linker search paths, and documenting the process for others. The full technical deep-dive is in How to use Fox Renderfarm on Arch Linux, and it shows the same methodical problem-solving I apply to AI-assisted development.

Context Engineering (Not Vibe Coding)

Here’s where things get interesting. Early on, I noticed that AI agents perform dramatically better when you give them thorough documentation and context. I started providing screenshots, copying relevant documentation, giving detailed examples, basically treating them like junior developers who needed proper onboarding.

The results were night and day. I was one-shotting complex applications that I wasn’t seeing anyone else build online. That’s when I discovered people had already coined terms for this: “context engineering,” frameworks like BMAD, and a whole set of approaches that were suddenly becoming buzzwords.

Some people call this “vibe coding.” I don’t love that term. It sounds too casual for what’s actually a fairly rigorous process. I’m not just throwing vibes at an AI and hoping for the best. I’m orchestrating a team of agents with clear specifications and detailed context.

The difference is that I actually enjoy providing that context. Before I make any point, I naturally provide extensive background. Humans sometimes find this tedious. LLMs? They thrive on it. And in my experience, this approach consistently gets better results.
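To make the “onboarding a junior developer” idea more concrete, here is a minimal sketch of how that context might be bundled before handing it to an agent. It assumes the context ends up as one structured Markdown prompt. `ContextBundle` and `build_prompt` are hypothetical names for illustration. They don’t come from BMAD or any other framework, and the sample task is made up.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Hypothetical container for the onboarding material given to a coding agent."""
    goal: str
    spec: list[str]                                        # concrete requirements, one per item
    docs: dict[str, str] = field(default_factory=dict)     # source name -> pasted doc excerpt
    examples: list[str] = field(default_factory=list)      # sample inputs/outputs or snippets
    screenshots: list[str] = field(default_factory=list)   # image paths, attached separately


def build_prompt(bundle: ContextBundle) -> str:
    """Render the bundle as one Markdown prompt: goal and requirements first, then references."""
    sections = [f"# Goal\n{bundle.goal}"]
    sections.append("# Requirements\n" + "\n".join(f"- {req}" for req in bundle.spec))
    # Each pasted documentation excerpt gets its own labeled section.
    for name, excerpt in bundle.docs.items():
        sections.append(f"# Reference: {name}\n{excerpt.strip()}")
    # Optional sections are only emitted when there is something to show.
    if bundle.examples:
        sections.append("# Examples\n" + "\n\n".join(bundle.examples))
    if bundle.screenshots:
        sections.append("# Attached screenshots\n" + "\n".join(f"- {p}" for p in bundle.screenshots))
    return "\n\n".join(sections)


# Hypothetical usage: a small feature request with a doc excerpt and a mockup.
bundle = ContextBundle(
    goal="Add a contact form that posts to the existing n8n webhook.",
    spec=["Validate email client-side", "Show an inline success state"],
    docs={"n8n webhook node": "Paste the relevant section of the docs here."},
    screenshots=["mockups/contact-form.png"],
)
print(build_prompt(bundle))
```

The ordering is a judgment call: putting the goal and requirements before the reference material means the agent sees what it is building before it reads how the pieces work.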

Building What I Actually Want

Here’s what it comes down to: I don’t want to use Motion. I don’t want to pay for Notion. I like the systems I already have - Nextcloud, Obsidian, Gitea - and those products don’t integrate with them anyway.

So I’m building my own replacement. A unified system that connects everything I actually use into one framework, with an AI agent that integrates into every relevant part of my workflow. Not something I have to explicitly prompt, but something with actual agency that helps me plan, make decisions, track communications, and get my time back.

Two years ago, saying “I’m building a Notion replacement” would’ve sounded delusional. Today? It just requires proper planning and time allocation.

That’s the shift. We’ve gone from “this is impossible” to “this is just a weekend project if I plan it right.”

I recently rebuilt my personal website using Astro as a testbed for these experiments. The site has become my sandbox for trying new patterns, deploying edge computing features, and testing AI integrations without client constraints. If you’re interested in the technical implementation, I wrote about building with Astro as an experimentation platform.

To test the limits of autonomous AI exploration, I recently ran a 30-day ecosystem experiment in which Claude Opus 4.5 had persistent filesystem access and minimal constraints. The resulting 1,320+ artifacts show what happens when an AI system is given the freedom to explore without explicit goals. It’s exactly the kind of unconventional project I wouldn’t have attempted two years ago.

And honestly? I’m excited to see where this goes. The next few years are going to be wild.